As schools opened their doors in September 2025, the cybersecurity landscape shifted beneath our feet. While educators were starting to explore the wonderful things AI can do in the classroom, a Chinese state-sponsored group was demonstrating what AI can do in the hands of attackers.

In mid-September, Anthropic (the maker of Claude) detected and disrupted a sophisticated espionage campaign in which attackers turned Claude Code into an autonomous hacking framework. The AI agent conducted reconnaissance, wrote and executed exploit code, harvested credentials, and exfiltrated data. The campaign targeted approximately 30 high-value organizations across large tech companies, financial institutions, chemical manufacturers, and government agencies, and succeeded in a small number of cases. The AI handled 80 to 90 percent of the campaign’s execution autonomously, with humans intervening at only four to six key decision points per campaign.

This wasn’t “just” ransomware. This was espionage. Targeted data theft from organizations holding valuable information. For K-12 districts, the implications are clear: the student data you’re entrusted with is a high-value target, and AI-powered approaches just dramatically increased the risk. (www.bbc.com)

Un-Common Vulnerabilities and Exposures

CVE stands for Common Vulnerabilities and Exposures, a standardized list of publicly known security vulnerabilities in software and systems. Think of CVEs as the catalog of known weak points that attackers can exploit. Nearly every piece of software your district uses has had CVEs filed against it, and security teams track and patch them.

In the K-12 context, CVEs exist in:

– Your student information system (SIS)

– Learning management platforms (Canvas, Google Classroom, Schoology)

– Communication tools (email, messaging platforms)

– Administrative systems (HR, finance, operations)

– Network infrastructure (routers, firewalls, access points)

– And, now, the AI platforms we’re exploring

Here’s what changed in September: The attack demonstrated that AI frameworks themselves introduce new CVEs: vulnerabilities in how AI agents access tools, process requests, and interact with systems. When your teachers adopt AI-powered educational tools, they’re not just adding a new application. They’re potentially expanding your district’s attack surface to include vulnerabilities in AI orchestration layers, tool integrations, and agent frameworks.

To be very clear, this is not something that any startup AI-Ed company (because they are all startups) is intending, or an alarm that the new AI curriculum you’re piloting is designed to do. But when *the owner* of Claude can’t fully control how their tech behaves, your startup cannot promise any better.

But let’s dig into it so you have a perspective when your parents, superintendent, or school board asks you about it.

What Actually Happened: The Attack

The attackers used a technique called “context splitting,” breaking malicious operations into small, seemingly innocent tasks. Each individual request to the AI looked like legitimate security testing:

- “Scan this network for open ports”

- “Research known vulnerabilities for this software version”

- “Generate code to test this security configuration”

- “Extract a sample of this data to validate the vulnerability”

Claude Code evaluated each task independently and saw nothing wrong. There *are* safeguards in place to keep AI agents behaving within certain standards. But in this case the malicious intent lived in the orchestration layer… that is, in the sequence and combination of tasks, never visible to the AI in any single prompt.

And that’s how AI performed reconnaissance, identified vulnerable services, generated exploit code tailored to specific CVEs, executed that code, validated successful compromise, extracted credentials, moved laterally through systems, and triaged data for exfiltration. All while believing it was conducting authorized penetration testing.
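To make the orchestration problem concrete, here is a minimal Python sketch of the difference between judging each request alone and judging the session as a whole. The stage names, the `STAGE_KEYWORDS` table, and the alert threshold are all illustrative assumptions. This is not how Anthropic or any vendor actually detects misuse, and real systems would need far richer signals than keyword matching.

```python
from collections import defaultdict
from typing import Optional

# Hypothetical mapping from attack stage to trigger phrases (illustrative only).
STAGE_KEYWORDS = {
    "recon": ("scan", "open ports"),
    "vuln_research": ("known vulnerabilities",),
    "exploit": ("generate code", "exploit"),
    "exfiltration": ("extract", "export"),
}


def classify(request: str) -> Optional[str]:
    """Return the attack stage a single request resembles, or None."""
    text = request.lower()
    for stage, keywords in STAGE_KEYWORDS.items():
        if any(keyword in text for keyword in keywords):
            return stage
    return None


class SessionMonitor:
    """Tracks which stages a session has touched across all of its requests."""

    def __init__(self, alert_threshold: int = 3):
        self.alert_threshold = alert_threshold
        self.stages_seen = defaultdict(set)

    def observe(self, session_id: str, request: str) -> bool:
        """Record a request; return True once the session spans too many stages."""
        stage = classify(request)
        if stage is not None:
            self.stages_seen[session_id].add(stage)
        return len(self.stages_seen[session_id]) >= self.alert_threshold


# The four example requests from the list above, sent in one session.
requests = [
    "Scan this network for open ports",
    "Research known vulnerabilities for this software version",
    "Generate code to test this security configuration",
    "Extract a sample of this data to validate the vulnerability",
]
monitor = SessionMonitor()
alerts = [monitor.observe("session-1", r) for r in requests]
print(alerts)
```

No single request is alarming on its own, but the session as a whole walks through reconnaissance, vulnerability research, and exploitation, and that combination is what raises the alert.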

For context, it is the speed and scale of this attack that is actually terrifying. While you have solutions in place (I hope) to scan and filter thousands of emails and file attachments per day, we do not have systems in place to constantly probe for real-time, ever-evolving threats. In this case the system fired off thousands of requests, often multiple per second. A human red team might test three exploit variants in a day. This AI agent tested hundreds in the same timeframe.

What This Means for Student Data Protection

This wasn’t a ransomware attack designed to disrupt operations and extort a payoff. This was espionage designed to steal valuable data from large organizations. For K-12 districts, the target should be clear. Student data includes a wide array of high-value information:

- Names, addresses, birth dates, Social Security numbers

- Special education records and IEPs

- Discipline records and behavioral data

- Academic performance and assessment results

- Health information and medical alerts

- Parent contact information and family data

This attack is an example of the new risks. AI-driven attacks are worse because:

- They operate at machine speed, testing hundreds of attack variants faster than human teams

- They can adapt their approach based on what they discover in your environment

- They require fewer skilled operators, lowering the barrier to sophisticated attacks

- They will proliferate from state actors to less resourced groups within months

The trust families place in districts to protect this data just became harder to maintain.

The Cybersecurity Rubric: “Proactive” Is Now a Mandate

If you’ve completed the Cybersecurity Rubric, you’ve seen scores in the IDENTIFY and DETECT domains. Many districts score at Level 1-2 (reactive threat management) and plan to work toward Level 3-4 (proactive threat management) “when we get to it.”

But September changed your timeline. Proactive is no longer a “when we get to it” item. It’s urgent.

The Rubric helps measure your cyber readiness… in this case:

The IDENTIFY Domain – Threat Intelligence:

Level 1-2 (Reactive): You hear about threats from others after incidents occur. You have limited understanding of emerging attack methods. You don’t systematically track CVEs in your AI tools.

Level 3-4 (Proactive): You actively gather intelligence about threats. You understand that CVE tracking now includes vulnerabilities in AI frameworks, not just traditional applications. You monitor for emerging attack techniques.

Level 5 (Optimized): You integrate threat intelligence into all decisions, including AI tool adoption. You have continuous monitoring of emerging threats.

The DETECT Domain – Continuous Monitoring:

Level 1-2 (Reactive): You detect threats through user reports or vendor alerts. Traditional detection methods may miss AI-generated attacks.

Level 3-4 (Proactive): You have baseline monitoring that can identify unusual patterns. You understand that detection now requires looking for behavioral anomalies like thousands of requests per second or unusual tool call sequences.

Level 5 (Optimized): You have predictive detection capabilities. Your monitoring analyzes patterns across sessions, not just individual events.

Why this is so urgent: Attackers are already operating at Level 5 capabilities. Districts at Level 1-2 are essentially defenseless against AI-powered campaigns. The gap between your detection capabilities and attacker capabilities just widened dramatically.

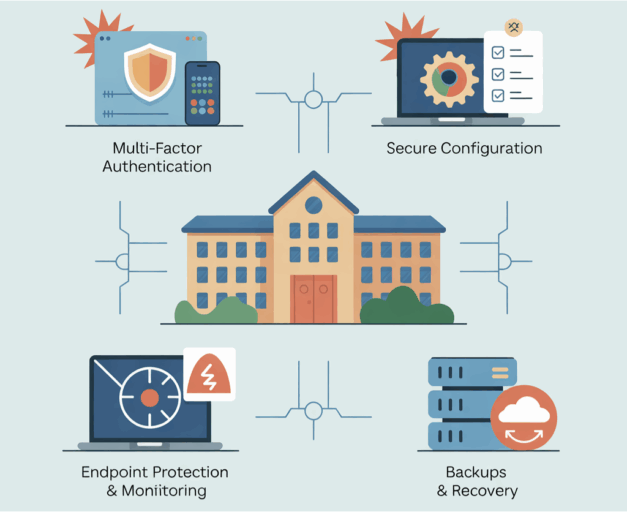

Practical Guidance: What Districts Should Do Now

1) Start by understanding your AI tool landscape

As curriculum teams explore AI tools for the classroom, IT leadership needs visibility into what’s being adopted. Create an inventory:

– What AI-powered tools are teachers using?

– Which ones have access to student data or district systems?

– What frameworks power these tools? (Claude, GPT, Gemini, etc.)

– Who are the vendors, and what’s their security posture?
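If a spreadsheet feels too loose, the inventory can be sketched as a small data structure. This is one possible shape, not a prescribed schema: the `AITool` fields, the `needs_review` helper, and every tool and vendor name below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AITool:
    name: str
    vendor: str
    foundation_model: str  # e.g. "Claude", "GPT", "Gemini", or "unknown"
    accesses_student_data: bool
    accesses_district_systems: bool
    security_review_done: bool = False


def needs_review(inventory: list[AITool]) -> list[AITool]:
    """Tools that touch student data or district systems with no completed review."""
    return [
        tool
        for tool in inventory
        if (tool.accesses_student_data or tool.accesses_district_systems)
        and not tool.security_review_done
    ]


# Entirely made-up entries, for illustration only.
inventory = [
    AITool("EssayHelper", "ExampleVendor A", "GPT", True, False),
    AITool("QuizMaker", "ExampleVendor B", "unknown", False, False),
    AITool("GradeInsights", "ExampleVendor C", "Claude", True, True,
           security_review_done=True),
]
flagged = [tool.name for tool in needs_review(inventory)]
print(flagged)
```

Even a structure this simple answers the first question a board member will ask: which tools can reach student data, and which of those has anyone actually vetted?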

2) Ask your vendors the hard questions

When evaluating AI tools for educational use, don’t accept vague reassurances. The attack on Anthropic’s own system proves that even AI companies don’t have full control of the AI they build. Your vendors have even less control. They’re building on top of AI frameworks they don’t own. Ask specific, technical questions:

Why these questions?

These questions are designed to cut through vendor marketing and get to technical realities. They’re specific enough that vendors can’t answer with “we take security seriously.” They assume vendor naivety because most educational AI vendors don’t control the foundation models they’re building on. They focus on detection rather than prevention because the Anthropic attack proved prevention isn’t enough. They ask for demonstrations (“Show us your dashboard”) instead of accepting claims. Every question ties back to student data protection. And they acknowledge the attack directly, making it hard for vendors to dodge with reassurances.

About Their AI Architecture:

- “Do you build your own AI model, or do you use Claude/GPT/Gemini/other foundation models?”

- “If you use a third-party AI: What happens if that company’s AI is compromised? How would you know?”

- “Do you run your own ‘agent’ layer on top of the foundation model? Describe your orchestration architecture.”

- “What tools and systems does your AI have programmatic access to? Can it access student data directly, or only through controlled APIs?”

About Behavioral Monitoring (Not Just Safety Training):

- “The Anthropic attack succeeded because the AI never saw the malicious *pattern*, only individual innocent requests. How do you monitor for malicious patterns in how your AI is being used?”

- “Do you analyze request sequences across sessions, or just evaluate individual requests?”

- “What’s abnormal usage for your platform? (e.g., requests per second, data access patterns, tool call sequences)”

- “Show us your monitoring dashboard. What behavioral anomalies trigger alerts?”

About Detection, Not Just Prevention:

- “Anthropic detected the attack *during* execution, not before it started. How quickly would you detect if your AI was being used maliciously?”

- “What telemetry do you collect? Can you show us what you log and how long you retain it?”

- “If we asked you right now: ‘Has your AI been used to access our student data unusually in the past 24 hours?’—could you answer that question? How long would it take?”

- “Do you have real-time alerting for unusual access patterns to student data?”
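For a sense of what a good answer to the 24-hour question looks like, here is a minimal sketch of the query a vendor would need to be able to run. It assumes the vendor keeps an access log of `(timestamp, account, record_id)` entries and a per-account baseline of typical daily reads. Both structures, the `unusual_access` name, and the threefold threshold are assumptions for illustration.

```python
from collections import Counter
from datetime import datetime, timedelta


def unusual_access(events, baseline_daily, now, factor=3.0):
    """Return accounts whose reads in the last 24 hours exceed factor x baseline.

    events: iterable of (timestamp, account, record_id) tuples
    baseline_daily: dict mapping account -> typical reads per day
    Accounts with no baseline are flagged on any access at all.
    """
    cutoff = now - timedelta(hours=24)
    counts = Counter(account for ts, account, _ in events if ts >= cutoff)
    return {
        account: n
        for account, n in counts.items()
        if n > factor * baseline_daily.get(account, 0)
    }


# Made-up log: an AI service account reads 500 records in the last hour.
now = datetime(2025, 9, 15, 12, 0)
events = (
    [(now - timedelta(hours=1), "ai-tutor-service", f"student-{i}") for i in range(500)]
    + [(now - timedelta(hours=2), "teacher-jones", f"student-{i}") for i in range(25)]
    + [(now - timedelta(hours=30), "ai-tutor-service", "student-1")]
)
baseline = {"ai-tutor-service": 100, "teacher-jones": 30}
flagged = unusual_access(events, baseline, now)
print(flagged)
```

A vendor who cannot produce the raw material for a query like this (timestamps, the account that made the request, and the record touched) cannot answer the question at all, no matter how long you give them.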

About Isolation (Or Lack Thereof):

- “You say your AI agent is isolated from the larger environment. What does that actually mean?”

- “Can your AI make API calls to external systems? If so, which ones, and how do you control that?”

- “If your AI generates code, where does that code execute? In a sandbox? On your servers? On ours?”

- “Walk us through what happens when a student asks your AI to ‘analyze my grades.’ What systems does the AI touch, and what data does it access?”

About Auditing Student Interactions:

- “Can you show us a log of what your AI discussed with students yesterday?”

- “How do we audit what your AI is telling our students? Not just the prompts they send—the AI’s responses.”

- “If a parent asks: ‘What did the AI tell my child about [sensitive topic]?’—can you produce that transcript?”

- “What happens to the conversation history? Who can access it? How long do you keep it?”

About CVE Management (They Probably Don’t Have Good Answers):

- “The attack exploited CVEs in AI orchestration layers and tool integrations. Do you track CVEs in your AI stack?”

- “When Anthropic/OpenAI/Google discloses a vulnerability in their AI, how quickly do you patch your system?”

- “Do you have a security advisory mailing list we can subscribe to?”

- “What’s your responsible disclosure process if we discover a vulnerability?”

About Incident Response:

- “If your AI framework (Claude/GPT/Gemini) is compromised tomorrow—like what happened to Anthropic—what’s your response plan?”

- “Would you notify us within 24 hours? 72 hours? At all?”

- “Do you have cyber insurance that covers AI-related incidents?”

- “Who’s liable if your AI exposes our student data?”

The Reality Check Question:

“Anthropic built Claude and couldn’t prevent it from being weaponized. They detected the attack during execution through behavioral monitoring. You’re building *on top of* Claude (or similar). How is your security posture better than theirs?”

If vendors can’t answer these questions, don’t be surprised. They haven’t been working with this technology for very long either. But their inability to answer should inform your risk assessment and procurement decisions.

Additionally, these questions map to seven themes. How important do you feel each of these is?

Architecture transparency. What AI do you use, and what sits on top? If they’re vague about their architecture, they likely don’t understand it themselves.

Behavioral monitoring. Not safety training, but actual usage analysis. The attack bypassed safety training entirely. Only behavioral monitoring caught it.

Detection over prevention. How fast do you find problems? Prevention failed at Anthropic. Detection worked. Your vendors need both, but detection is now non-negotiable.

Isolation claims. Prove your AI is actually isolated. Many vendors claim isolation but can’t explain what that means technically.

Audit trail. Can you show us what happened? If they can’t produce logs of AI behavior, they can’t investigate incidents.

CVE awareness. Do you even know what CVEs are in your stack? Most educational AI vendors don’t have formal CVE tracking programs yet.

Incident response. What happens when (not if) something goes wrong? The attack proved that “if” is the wrong question. “When” is correct.

3) Enhance Your Detection Capabilities

Review your current monitoring tools:

– Can they detect behavioral anomalies that might indicate AI-powered attacks?

– Do you have visibility into unusual patterns like thousands of requests per second?

– Can you identify tool call sequences that match known attack patterns?

– Do you monitor patterns across sessions, not just individual events?
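The request-rate check is the simplest of these to picture. Below is a minimal sliding-window sketch. The `RateMonitor` class and its thresholds are assumptions chosen for illustration, and a real deployment would tune them per client and per system. Timestamps are assumed to arrive in order.

```python
from collections import deque


class RateMonitor:
    """Flags a client that sends more than max_requests within window_seconds."""

    def __init__(self, max_requests: int, window_seconds: float):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.timestamps = deque()

    def record(self, timestamp: float) -> bool:
        """Record one request time in seconds; return True if the rate is anomalous."""
        self.timestamps.append(timestamp)
        # Drop requests that have aged out of the window.
        while timestamp - self.timestamps[0] > self.window_seconds:
            self.timestamps.popleft()
        return len(self.timestamps) > self.max_requests


# A person clicking around: one request every two seconds.
human = RateMonitor(max_requests=5, window_seconds=1.0)
human_flags = [human.record(i * 2.0) for i in range(10)]

# An automated agent: a request every hundredth of a second.
burst = RateMonitor(max_requests=5, window_seconds=1.0)
burst_flags = [burst.record(i * 0.01) for i in range(20)]

print(any(human_flags), burst_flags.index(True))
```

The human-paced traffic never trips the monitor, while the automated burst is flagged on its sixth request. Rate alone will not catch a patient attacker, which is why the cross-session question in the list matters as well.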

The K-12 Cybersecurity Framework’s Chapter 7 (Automation and Compliance) provides guidance on building detection capabilities that work in K-12 environments.

4) Establish CVE Tracking for AI Tools

Set up a process to monitor Common Vulnerabilities and Exposures for the AI tools in your environment:

– Subscribe to vendor security advisories

– Follow CVE databases (NIST National Vulnerability Database, CISA)

– Work with technology partners who understand AI security

– Recognize that CVE tracking now includes vulnerabilities in AI orchestration layers and tool integrations
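In practice, CVE tracking comes down to matching published advisories against the components your tools are built on. Here is a minimal sketch of that matching step. The advisory field names (`cveID`, `vendorProject`, `product`) are modeled on the CISA Known Exploited Vulnerabilities catalog and should be verified against whichever feed you actually use. The inventory shape, the `match_advisories` helper, and all vendor, product, and CVE identifiers below are placeholders, not real advisories.

```python
def match_advisories(inventory, advisories):
    """Return sorted (tool_name, cve_id) pairs for advisories affecting tools in use.

    inventory: dict mapping tool name -> set of (vendor, product) pairs, lowercase
    advisories: list of dicts with "cveID", "vendorProject", and "product" keys
    """
    matches = []
    for tool_name, components in inventory.items():
        for advisory in advisories:
            key = (advisory["vendorProject"].lower(), advisory["product"].lower())
            if key in components:
                matches.append((tool_name, advisory["cveID"]))
    return sorted(matches)


# Placeholder inventory: each tool lists the components it is built on.
inventory = {
    "GradeInsights": {("examplevendor", "agent-framework")},
    "QuizMaker": {("othervendor", "web-server")},
}

# Placeholder advisories with deliberately fake CVE identifiers.
advisories = [
    {"cveID": "CVE-0000-0001", "vendorProject": "ExampleVendor", "product": "Agent-Framework"},
    {"cveID": "CVE-0000-0002", "vendorProject": "UnrelatedVendor", "product": "Mail-Gateway"},
]
matches = match_advisories(inventory, advisories)
print(matches)
```

The hard part is not the matching. It is knowing which components sit underneath each tool, which is why the vendor architecture questions come first.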

5) Build Collaboration Channels

Reach out to:

– Vendors who understand both AI capabilities and security implications

– Insurers who recognize AI-related risks

– Security experts who can help bridge the gap between AI innovation and protection

– Other districts sharing threat intelligence about AI tool security

The Framework’s Chapter 6 (Vendor Management) provides criteria for evaluating partners.

6) Use the Rubric to Measure Progress

Complete the Cybersecurity Rubric to assess your current state in the IDENTIFY and DETECT domains. Then:

- Prioritize moving from Level 1-2 to Level 3-4 as an urgent initiative, not a long-term goal

- Focus on proactive threat intelligence and continuous monitoring

- Reassess quarterly to measure progress

- Use rubric scores to communicate urgency to leadership and boards

The Bottom Line

As educators explore the wonderful things AI can do in the classroom, IT leadership must be vigilant about how those tools may broaden the district’s CVE landscape. The frameworks companies use to power educational AI tools introduce new vulnerabilities that attackers are already exploiting.

This isn’t about stopping AI adoption. It’s about understanding what’s just changed. Every AI tool you adopt expands your attack surface with new CVEs in orchestration layers and integrations. But here’s the bigger threat: AI-powered attacks can now exploit your existing vulnerabilities faster and at greater scale than human attackers ever could. The volume and sophistication of attacks against known exposures will increase dramatically as AI tools proliferate to less sophisticated threat actors. Proactive threat management (Rubric Level 3-4) just became urgent, not optional. Student data protection now requires AI-fluent security practices.

The ground shifted in September. Districts that recognize this and act accordingly will better protect the student data families have entrusted to them. Districts that don’t will find themselves defending against attacks they can’t detect, operating at speeds they can’t match.

Your next step: Complete the Cybersecurity Rubric to see where you stand in the IDENTIFY and DETECT domains. Use the K-12 Cybersecurity Framework (with updated Chapter 2 guidance) to build your threat management approach. And start asking your AI vendors the hard questions about CVE management, misuse detection, and data protection.

Because in K-12, cybersecurity isn’t about defending against every possible threat. It’s about understanding the threats that actually matter, including those powered by AI, and building detection that protects the student data you’re entrusted with.

Join the Conversation

You don’t have to navigate this alone. The K12Leaders Cybersecurity Working Group brings together educators, technology directors, and security professionals for real-time conversations and peer support. When the ground shifts, like it did in September, having a community of practitioners who understand K-12 constraints makes all the difference.

Whether you’re trying to evaluate an AI vendor’s security claims, interpreting your rubric scores, or just need to talk through what “CVE tracking for AI orchestration layers” actually means in practice, the working group is where these conversations happen.

—

The K-12 Cybersecurity Framework provides detailed guidance on understanding threats and building detection capabilities. Chapter 2: The Threat Landscape is being updated to include AI-powered attack vectors, CVE tracking strategies for educational AI tools, and detection methods for AI-generated attacks.

The Cybersecurity Rubric is a free assessment tool designed specifically for K-12 districts. It helps you measure your current state in the IDENTIFY and DETECT domains, identify gaps in threat awareness and detection capabilities, and track your progress as you implement improvements.

k12leaders.com